In this lesson, you will explore the layers of an operating system (OS) and their roles in managing computer resources. You will learn about the hardware layer, kernel, application layer, and user interface, with a focus on process management, file systems, and virtualisation. Using examples from Linux and Windows, you will discuss how these components interact to make computers efficient and user-friendly.

By the end of this lesson, you will be able to:

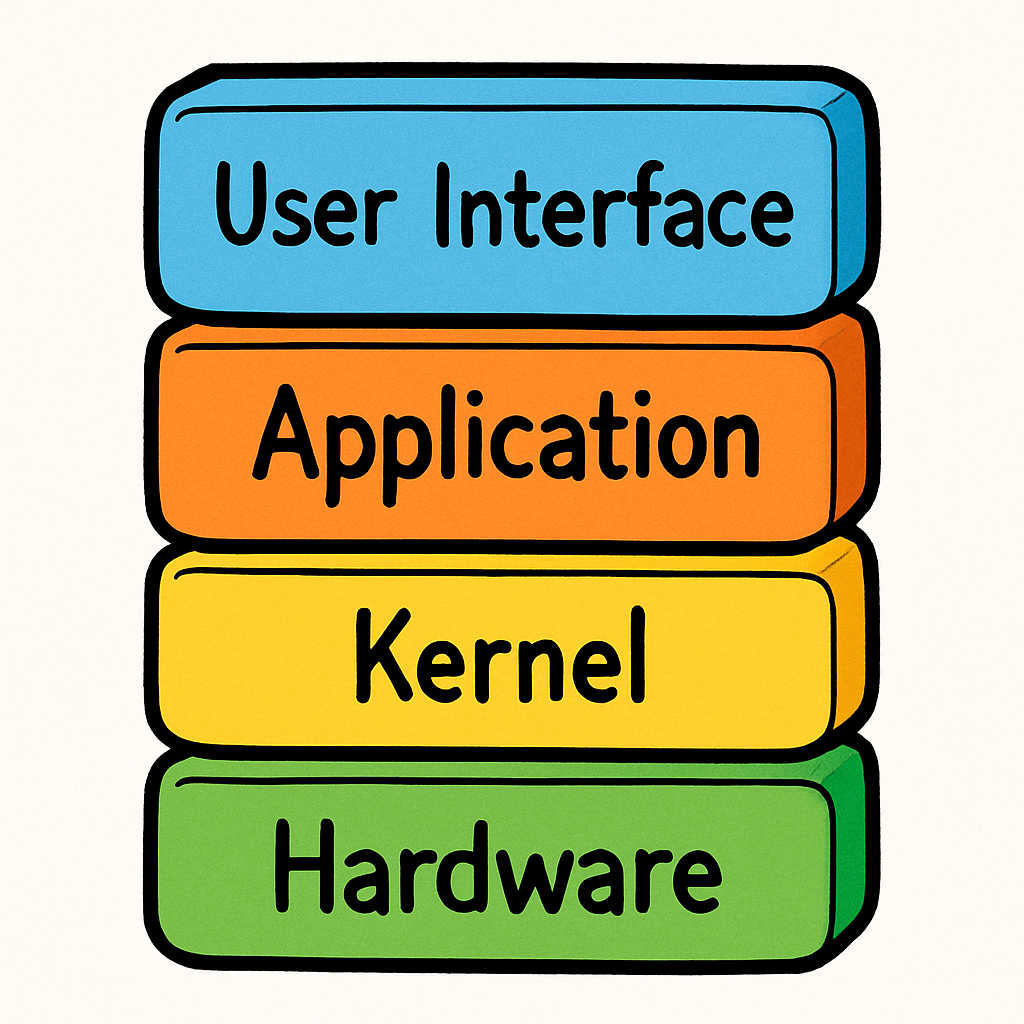

An operating system acts as an intermediary between users and computer hardware, managing resources and providing a platform for applications. It is structured in layers, each with specific responsibilities, to ensure efficient operation. Let's explore these layers in detail:

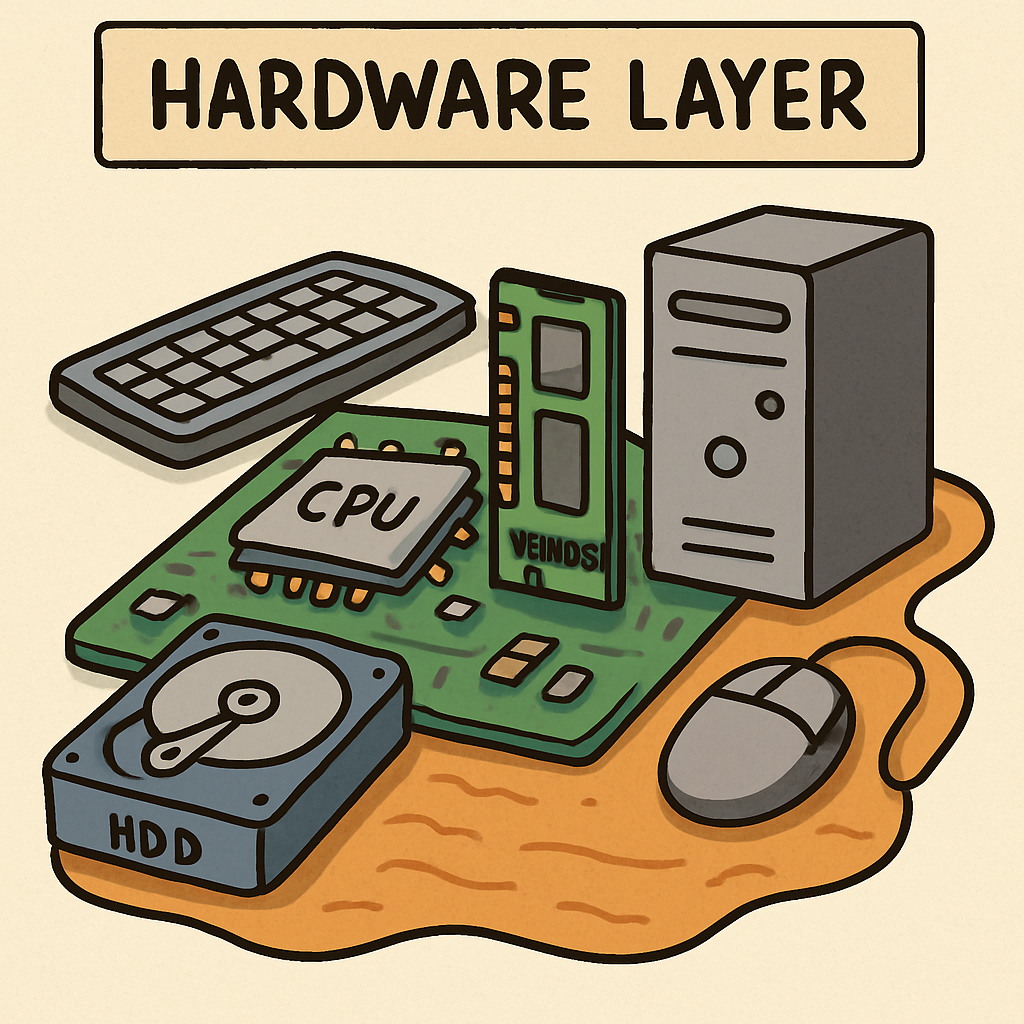

Hardware Layer:

This is the foundation, consisting of physical components like the CPU, memory, storage devices, and input/output peripherals. The OS interacts directly with this layer to control hardware resources.

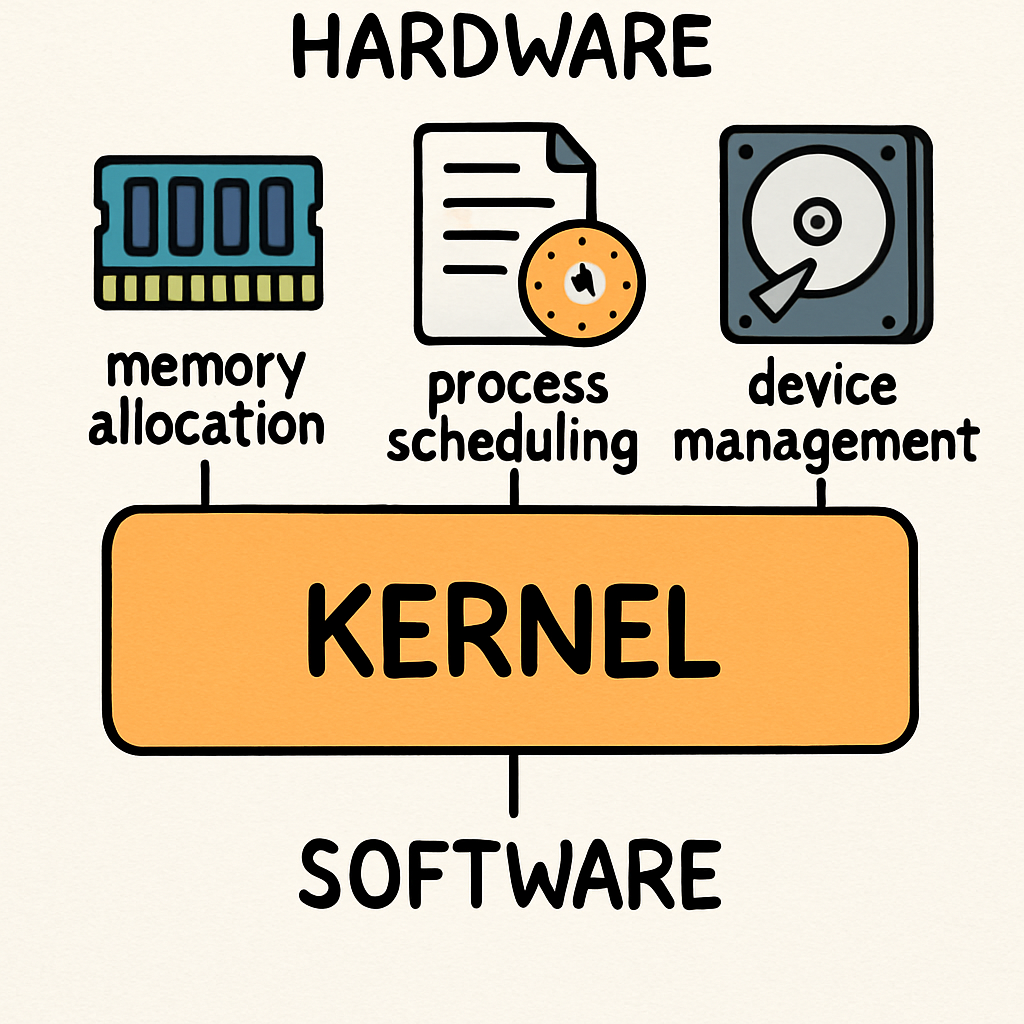

Kernel Layer:

The kernel is the core of the OS, handling low-level tasks such as memory allocation, process scheduling, and device management. It acts as a bridge between hardware and software. In Linux, the kernel is open-source and highly customisable, whereas Windows uses the proprietary Windows NT kernel, whose source code is not publicly available.

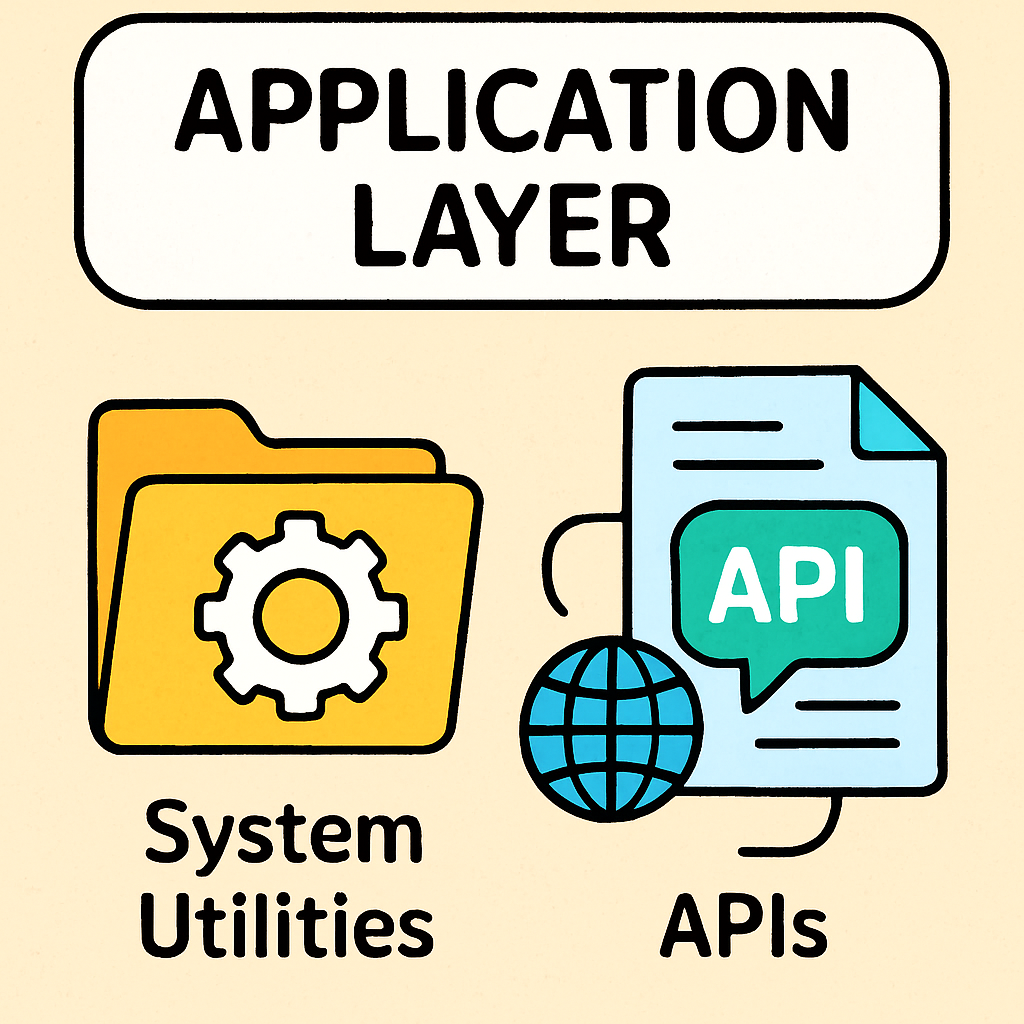

Application Layer:

This layer includes system utilities and application programming interfaces (APIs) that allow software to run. It provides services like file management and networking. For instance, Linux uses shells like Bash for command-line applications, while Windows offers APIs like Win32 for developers.

User Layer:

The top layer is the user interface, such as graphical user interfaces (GUIs) or command-line interfaces (CLIs). It allows users to interact with the system easily. Windows provides a familiar GUI with the Start menu, while Linux distributions like Ubuntu offer both GUI (e.g., GNOME) and CLI options.
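
The layering described above can be observed from inside an application. The following is a minimal sketch, assuming a standard Python installation: the program never touches the hardware directly, but asks the operating system for information through library calls. The function name `system_summary` is made up for this illustration.

```python
import os
import platform


def system_summary():
    """Collect a few facts that the application obtains from the OS."""
    return {
        "os": platform.system(),               # e.g. the name of the OS family
        "kernel_release": platform.release(),  # release string reported by the OS
        "cpu_count": os.cpu_count(),           # logical CPUs visible to the OS (may be None)
        "pid": os.getpid(),                    # ID the kernel assigned to this process
    }


if __name__ == "__main__":
    for key, value in system_summary().items():
        print(f"{key}: {value}")
```

The values printed depend on the machine, so running it on a Linux and a Windows computer is a quick way to compare the two systems.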

Now that you've learned about the layers of an operating system, let's apply this knowledge to an everyday task: opening a web browser on your computer. This will help you see how all the layers work together in practice. We'll break it down step by step, explaining what happens behind the scenes when you click on your browser icon.

Process management is a key function of the OS kernel, involving the creation, scheduling, and termination of processes.

But what exactly is a process? Think of it as a running program – for example, when you open a web browser or a game, that's a process in action. The kernel oversees these processes to ensure the computer runs smoothly, making sure no single program hogs all the resources.
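
To make the idea of "a running program" concrete, here is a small sketch, assuming Python is installed. It starts a second Python program as a child process and shows that the operating system gives each process its own process ID (PID). The function name `run_child` is made up for this illustration.

```python
import os
import subprocess
import sys


def run_child():
    """Start a child Python process and return (parent_pid, child_pid)."""
    # sys.executable is the path of the Python interpreter running this script.
    result = subprocess.run(
        [sys.executable, "-c", "import os; print(os.getpid())"],
        capture_output=True,
        text=True,
        check=True,
    )
    # The child printed its own PID; the parent reads it from the output.
    return os.getpid(), int(result.stdout.strip())


if __name__ == "__main__":
    parent_pid, child_pid = run_child()
    print(f"parent PID: {parent_pid}, child PID: {child_pid}")
```

The two numbers differ on every run because the kernel assigns a fresh PID to each process it creates.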

The kernel's scheduler ensures efficient use of CPU time and resources by deciding which process gets to use the CPU at any given moment, like a traffic controller directing cars at a busy junction.

In Windows, the Task Manager shows running processes and lets you end tasks. Behind the scenes, the Windows scheduler uses priority-based scheduling, which means higher-priority tasks are given CPU time first. Linux uses tools like 'ps' and 'top' for monitoring, with the kernel handling multitasking through time-sharing, where the CPU switches quickly between processes to make it seem like everything is running at once.
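
Time-sharing can be illustrated with a toy simulation. The sketch below uses round-robin scheduling, one of the simplest time-sharing policies, in which each process gets a fixed time slice in turn. It is not how the Windows or Linux schedulers actually work (they also take priorities and other factors into account), and the process names and burst times are invented for the example.

```python
from collections import deque


def round_robin(burst_times, quantum):
    """Return the order in which processes receive CPU time slices.

    burst_times maps a process name to the CPU time it still needs;
    quantum is the length of one time slice.
    """
    queue = deque(burst_times.items())
    timeline = []
    while queue:
        name, remaining = queue.popleft()
        timeline.append(name)            # this process runs for one slice
        remaining -= quantum
        if remaining > 0:
            queue.append((name, remaining))  # not finished: back of the queue
    return timeline


if __name__ == "__main__":
    order = round_robin({"browser": 3, "editor": 1, "music": 2}, quantum=1)
    print(order)
    # → ['browser', 'editor', 'music', 'browser', 'music', 'browser']
```

Because the slices are short, every process makes regular progress, which is why the programs appear to run at the same time.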

Examples: When you open multiple apps on Windows, the OS allocates CPU time to each process to prevent freezing. In Linux, servers run multiple processes simultaneously for web hosting, using virtual memory to isolate them – this means each process has its own 'space' in memory, preventing one from interfering with another.

Why is process isolation important for security? Consider a scenario where a faulty process could crash the entire system without proper management. For instance, if malware compromises one process, isolation makes it much harder for it to read or modify the memory of other processes.
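
One visible consequence of isolation is that each process keeps its own copy of its data. The following sketch, assuming Python is installed, lets a child process change an environment variable and then checks that the parent's copy is untouched. It demonstrates separate per-process state rather than the memory protection mechanism itself, and the variable name `LESSON_DEMO` is made up for the example.

```python
import os
import subprocess
import sys

# Code run by the child: it overwrites the variable in its OWN process only.
CHILD_CODE = (
    "import os; "
    "os.environ['LESSON_DEMO'] = 'changed by child'; "
    "print(os.environ['LESSON_DEMO'])"
)


def isolation_demo():
    """Return (value seen by the parent, value seen by the child)."""
    os.environ["LESSON_DEMO"] = "parent value"
    result = subprocess.run(
        [sys.executable, "-c", CHILD_CODE],
        capture_output=True,
        text=True,
        check=True,
    )
    # The parent's copy is read AFTER the child has finished.
    return os.environ["LESSON_DEMO"], result.stdout.strip()


if __name__ == "__main__":
    parent_value, child_value = isolation_demo()
    print(f"parent sees: {parent_value!r}, child saw: {child_value!r}")
```

The child starts with a copy of the parent's environment, so its change never reaches the parent.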

Take a moment to open a process monitoring tool on your computer and observe the running processes:

Spend a few minutes exploring: Identify a few processes, note their resource usage, and think about how the kernel is managing them in the background.

The file system is a crucial part of the operating system that organises and manages data storage on disks, such as hard drives or SSDs. It handles essential tasks like file creation, deletion, reading, writing, and access control, providing a structured way to store and retrieve information efficiently. Without a good file system, your computer would struggle to keep track of all your files, photos, documents, and programs, leading to chaos and potential data loss.

Different operating systems use different file systems to suit their needs. For example, Windows typically uses NTFS (New Technology File System), which supports large files, built-in encryption to protect sensitive data, and permissions to control who can access certain files or folders. This makes it great for secure environments, like in schools or businesses. On the other hand, Linux often uses ext4, which is known for its reliability and a feature called journaling (NTFS keeps a journal too). Journaling acts like a safety net – it logs changes before they're fully applied, so if there's a sudden crash or power outage, the file system can be restored to a consistent state instead of being left corrupted. Data that had not yet been written when the crash happened can still be lost.
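
The "log first, then apply" idea behind journaling can be sketched in a few lines. This is a toy model working on ordinary files, not how ext4 or NTFS implement their journals (which operate on disk blocks inside the file system and use commit records). The function names and file paths are invented for the illustration.

```python
import json
import os


def journaled_write(data_path, journal_path, new_content):
    """Write new_content to data_path, logging the change first."""
    # 1. Record the intended change and force it onto the disk.
    with open(journal_path, "w") as journal:
        json.dump({"target": data_path, "content": new_content}, journal)
        journal.flush()
        os.fsync(journal.fileno())
    # 2. Apply the change to the real file.
    with open(data_path, "w") as data:
        data.write(new_content)
        data.flush()
        os.fsync(data.fileno())
    # 3. The change is complete, so the journal entry is no longer needed.
    os.remove(journal_path)


def recover(journal_path):
    """After a crash, replay a pending journal entry. Return True if replayed."""
    if not os.path.exists(journal_path):
        return False  # nothing was in progress
    try:
        with open(journal_path) as journal:
            entry = json.load(journal)
    except json.JSONDecodeError:
        os.remove(journal_path)  # entry was cut off mid-write: discard it
        return False
    with open(entry["target"], "w") as data:
        data.write(entry["content"])
    os.remove(journal_path)
    return True
```

If the program stops between steps 1 and 2, calling `recover` on the next start finishes the interrupted write, so the file is never left half-updated.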

Take a few minutes to explore the file system on your computer and observe how files are managed:

Spend a few minutes exploring: Identify a few files or folders, note their properties, and think about how the file system organises them, handles permissions, and ensures data integrity in the background.
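
File properties can also be read from a program. The sketch below, assuming a standard Python installation, asks the operating system for the metadata the file system stores about a path. The function name `describe` is made up for the example, and on Windows the permission string is only an approximation of the NTFS permissions.

```python
import os
import stat
from datetime import datetime


def describe(path):
    """Return some of the metadata the file system keeps for a path."""
    info = os.stat(path)  # asks the OS for the file's metadata
    return {
        "size_bytes": info.st_size,
        "is_directory": stat.S_ISDIR(info.st_mode),
        "permissions": stat.filemode(info.st_mode),  # e.g. '-rw-r--r--'
        "modified": datetime.fromtimestamp(info.st_mtime).isoformat(timespec="seconds"),
    }


if __name__ == "__main__":
    # "." means the current folder; replace it with any file or folder path.
    for key, value in describe(".").items():
        print(f"{key}: {value}")
```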