In this lesson, you will explore the architecture of the CPU and memory in a computer system. You will learn about key CPU components like the ALU, registers, and program counter, as well as the memory hierarchy. Then, you will create a flowchart to visualise the fetch-execute cycle and understand how the CPU processes instructions.

By the end of this lesson, you will be able to:

The Central Processing Unit (CPU) is the brain of the computer, responsible for executing instructions. It handles everything from simple calculations to complex decision-making in programs. Let's break down its key components in more detail:

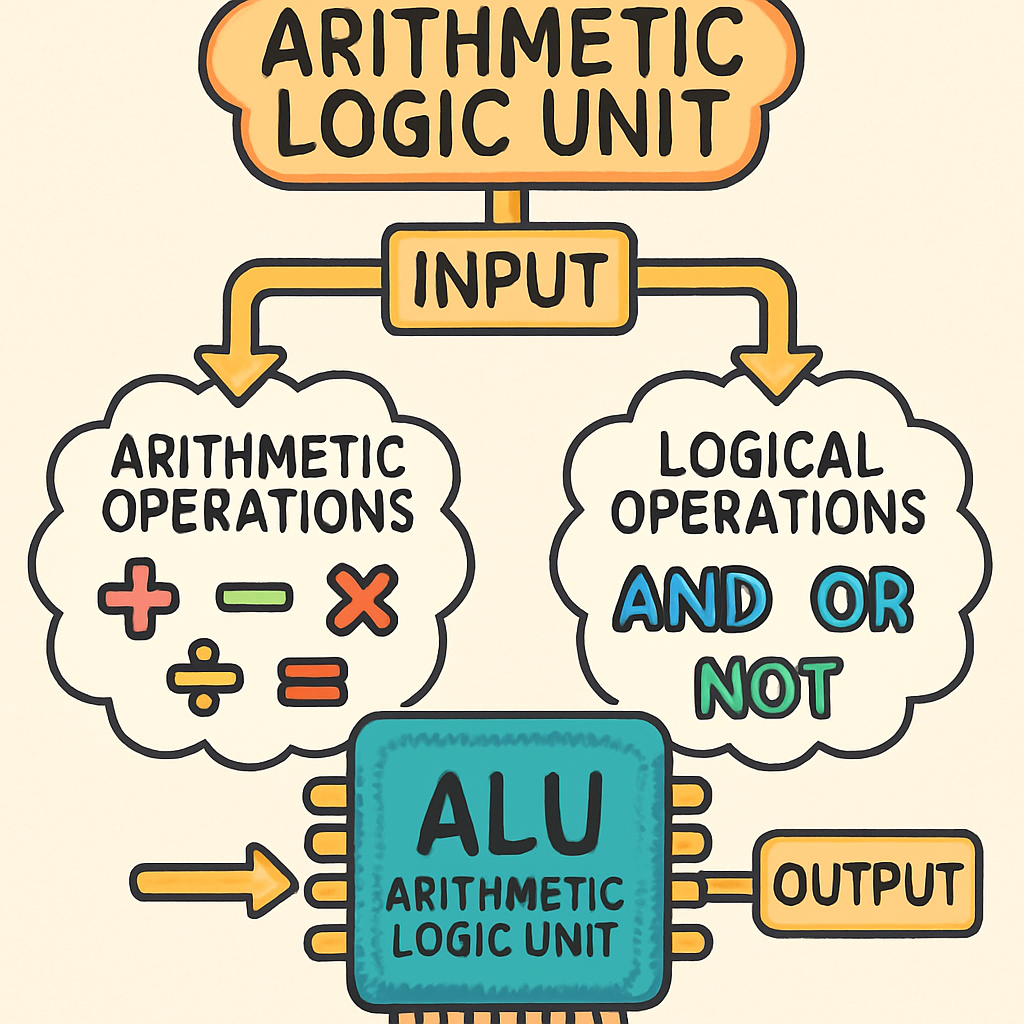

Arithmetic Logic Unit (ALU):

This is the part of the CPU that does the heavy lifting for calculations. It performs arithmetic operations (like addition, subtraction, multiplication, and division), logical operations (like AND, OR, and NOT), and comparisons between values. Essentially, whenever your computer needs to compute something, the ALU is where the actual work happens.
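To make the ALU's role concrete, here is a minimal Python sketch. The function name `alu`, the operation names, and the 8-bit word size are all assumptions chosen for illustration; a real ALU is a hardware circuit, not a function.

```python
def alu(op: str, a: int, b: int = 0) -> int:
    """Hypothetical 8-bit ALU: apply one operation and return the result."""
    mask = 0xFF  # assumption: results are kept to 8 bits, like a small CPU
    if op == "ADD":
        result = a + b
    elif op == "SUB":
        result = a - b
    elif op == "AND":
        result = a & b   # bitwise AND
    elif op == "OR":
        result = a | b   # bitwise OR
    elif op == "NOT":
        result = ~a      # bitwise NOT (only uses the first operand)
    else:
        raise ValueError(f"unknown operation: {op}")
    return result & mask  # discard any bits beyond the 8-bit word


print(alu("ADD", 5, 3))             # 8
print(alu("AND", 0b1100, 0b1010))   # 8 (binary 1000)
print(alu("NOT", 0b00001111))       # 240 (binary 11110000)
```

Masking the result to 8 bits mimics the fixed width of real hardware: a value that does not fit simply wraps around.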

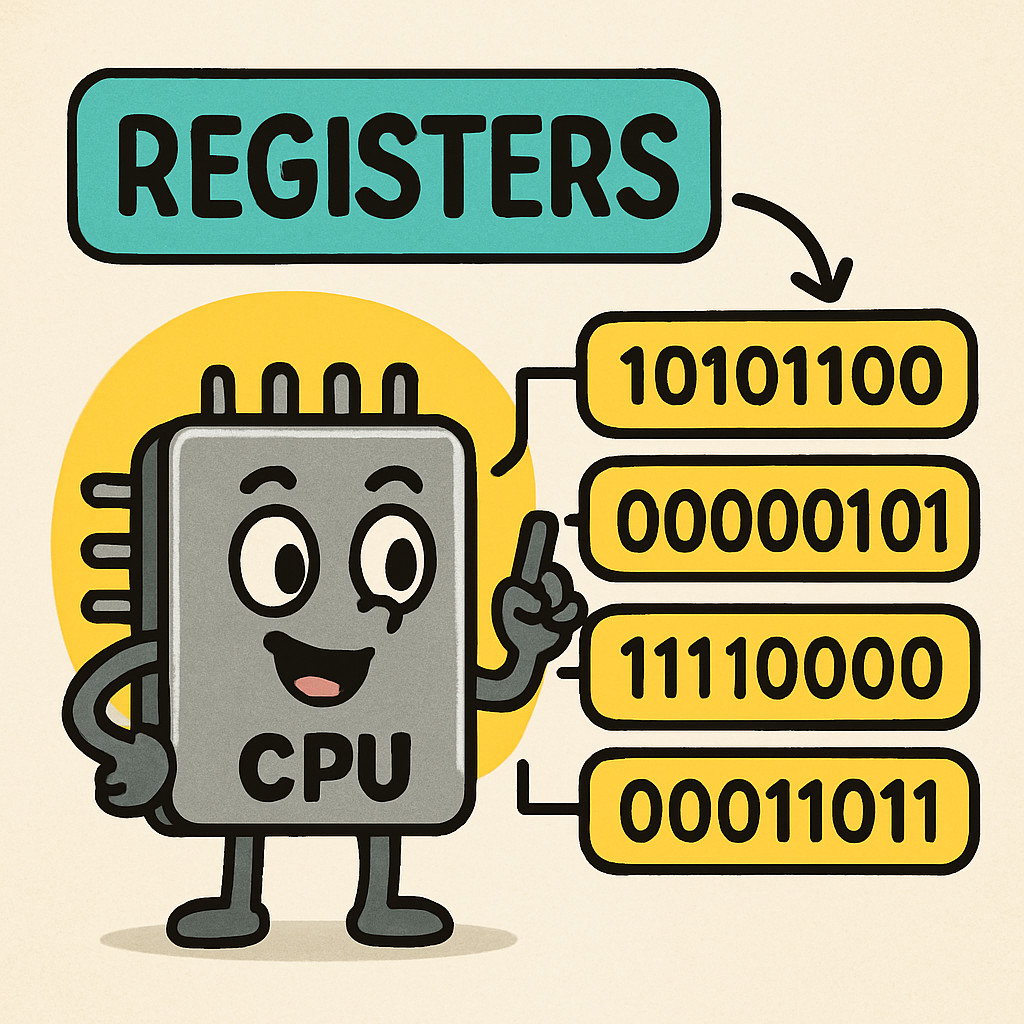

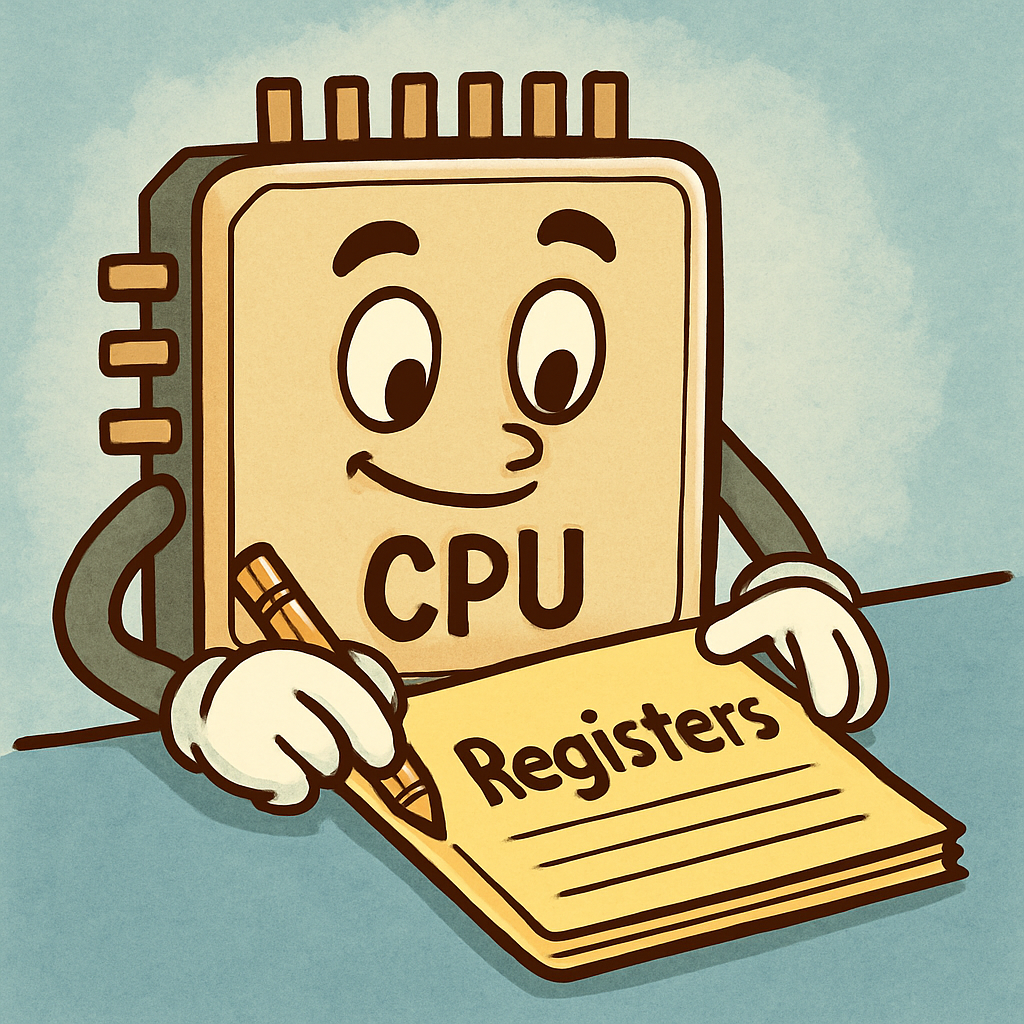

Registers:

These are like tiny, super-fast storage spots right inside the CPU. They hold data temporarily while the CPU is working on it, which is much quicker than fetching from main memory. For example, general-purpose registers can store any data, while special ones like the accumulator hold results of operations. There are usually only a few dozen registers, but their speed is crucial for performance.

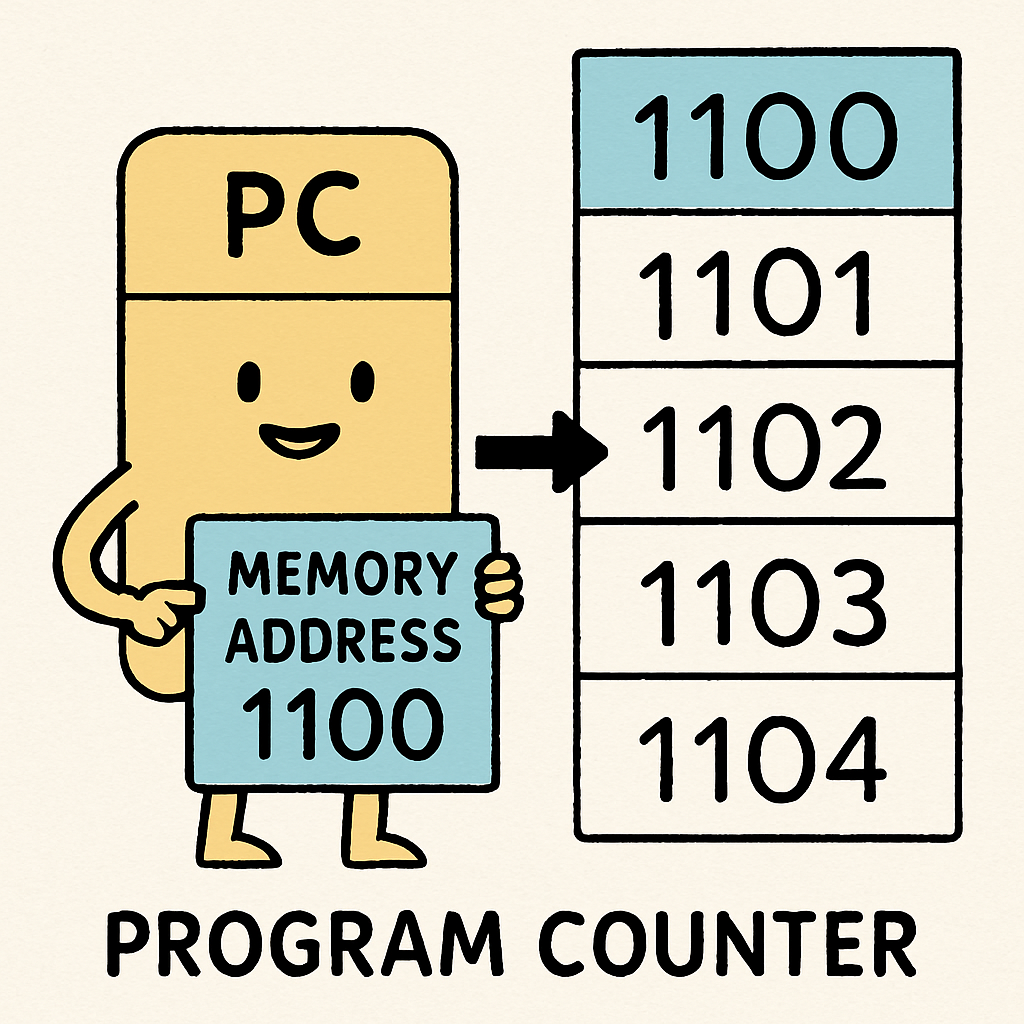

Program Counter (PC):

This is a special register that keeps track of where the CPU is in the program. It holds the memory address of the next instruction to be executed. After each instruction, it usually advances to the address of the next instruction in sequence (by one in simple models where every instruction occupies a single address), but it can jump to a different address for things like loops or if conditions in code.
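The behaviour of the program counter can be sketched in a few lines of Python. This is a simplified model: it assumes every instruction occupies exactly one address, and the instruction names (`LOAD`, `JMP`, `HALT`) and the helper `next_pc` are made up for this example.

```python
def next_pc(pc: int, instruction: tuple) -> int:
    """Return the address of the next instruction to run."""
    if instruction[0] == "JMP":
        return instruction[1]  # a jump overwrites the program counter
    return pc + 1              # otherwise move on to the next address


# Hypothetical program: address 2 is skipped because of the jump at address 1.
program = [("LOAD", 7), ("JMP", 3), ("LOAD", 99), ("HALT",)]

pc = 0
visited = []
while program[pc][0] != "HALT":
    visited.append(pc)
    pc = next_pc(pc, program[pc])
visited.append(pc)  # record the address of the HALT instruction too

print(visited)  # [0, 1, 3]
```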

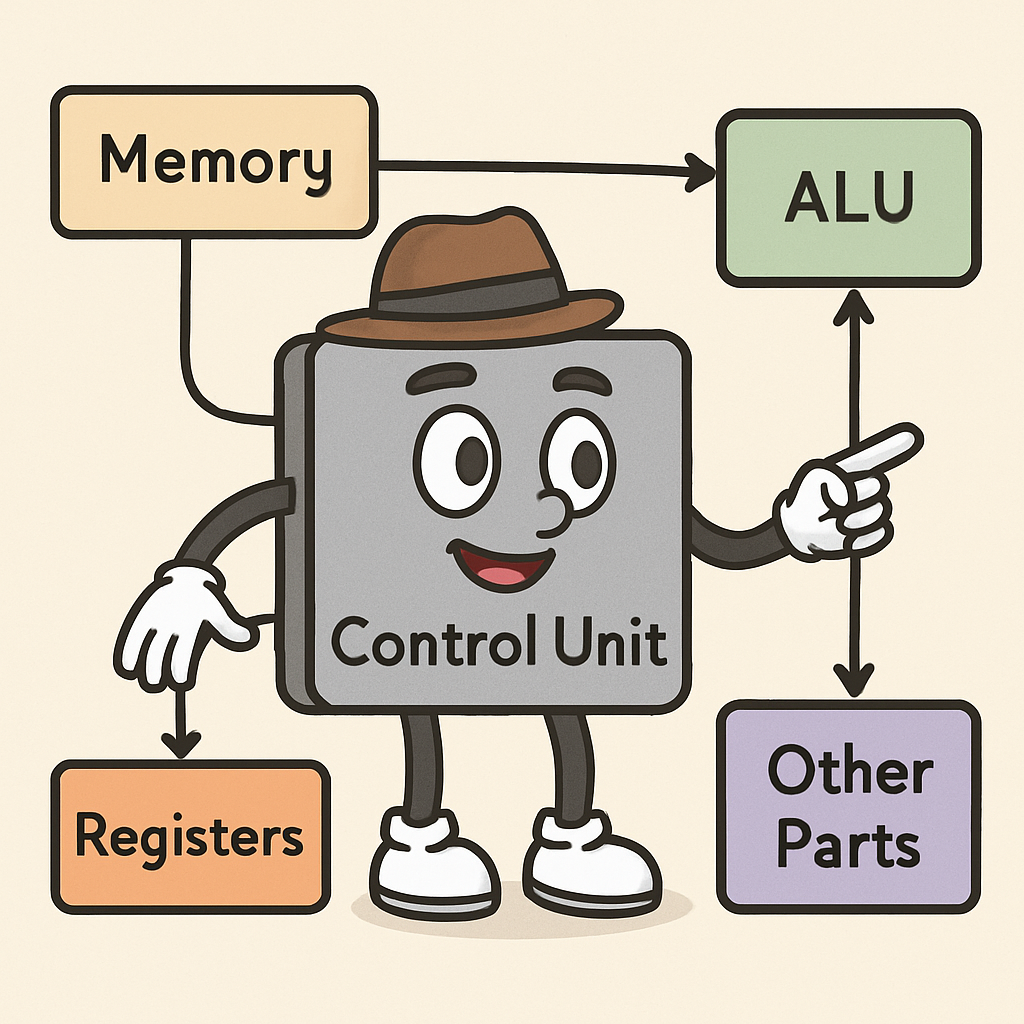

Control Unit:

This is the director of the CPU. It manages and coordinates the flow of data and instructions between the other components. It decodes instructions fetched from memory and sends signals to the ALU, registers, and other parts to carry out the operations. Without it, the CPU wouldn't know what to do with the instructions.

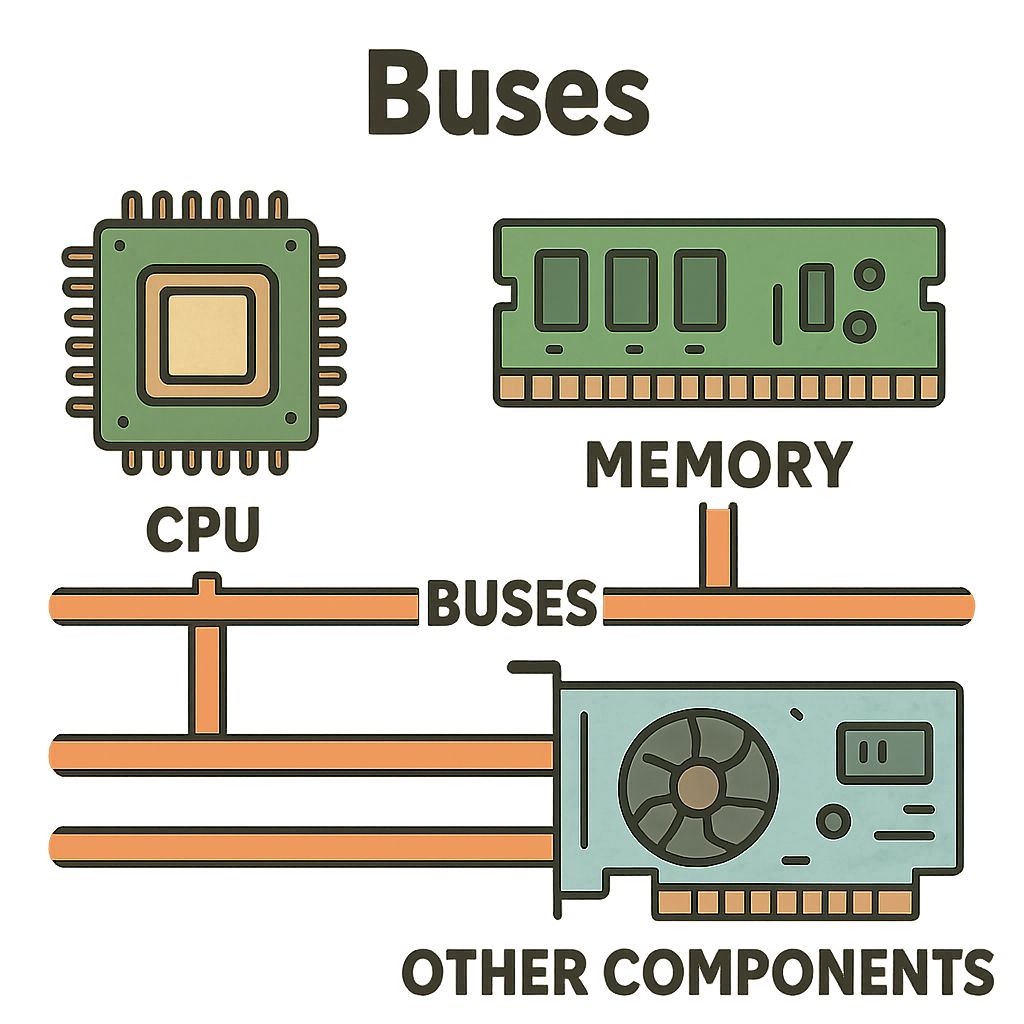

Buses:

These are the pathways that allow data to travel between the CPU, memory, and other components. There are three main types: the address bus (carries memory addresses), the data bus (transfers actual data), and the control bus (sends control signals). They act like highways connecting different parts of the computer.
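One way to picture the three buses is as the three pieces of information needed for any memory access. In the sketch below, the function `bus_transfer` and the `"READ"`/`"WRITE"` signal names are assumptions for illustration only: the `address` argument plays the role of the address bus, `control` the control bus, and `data` (or the return value) the data bus.

```python
def bus_transfer(memory: list, address: int, control: str, data=None):
    """Hypothetical memory access using the three bus roles."""
    if control == "READ":
        return memory[address]   # data travels from memory to the CPU
    if control == "WRITE":
        memory[address] = data   # data travels from the CPU to memory
        return None
    raise ValueError(f"unknown control signal: {control}")


ram = [0] * 8                      # a tiny memory with 8 locations
bus_transfer(ram, 3, "WRITE", 42)  # write 42 to address 3
print(bus_transfer(ram, 3, "READ"))  # 42
```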

In the next steps, we'll see how these components work together in the fetch-execute cycle to process instructions step by step.

Memory in a computer is organised in a hierarchy to balance speed and cost. Faster memory is more expensive and smaller, while slower memory is cheaper and larger. This setup ensures that the CPU can access data quickly when it needs it most, improving overall system performance.

Registers:

These are the fastest type of memory, located right inside the CPU. There are very few of them, typically between 16 and 32 per CPU (or per core in multi-core processors), but their speed allows the CPU to perform operations without delay. Think of them as the CPU's personal notepad for immediate use.

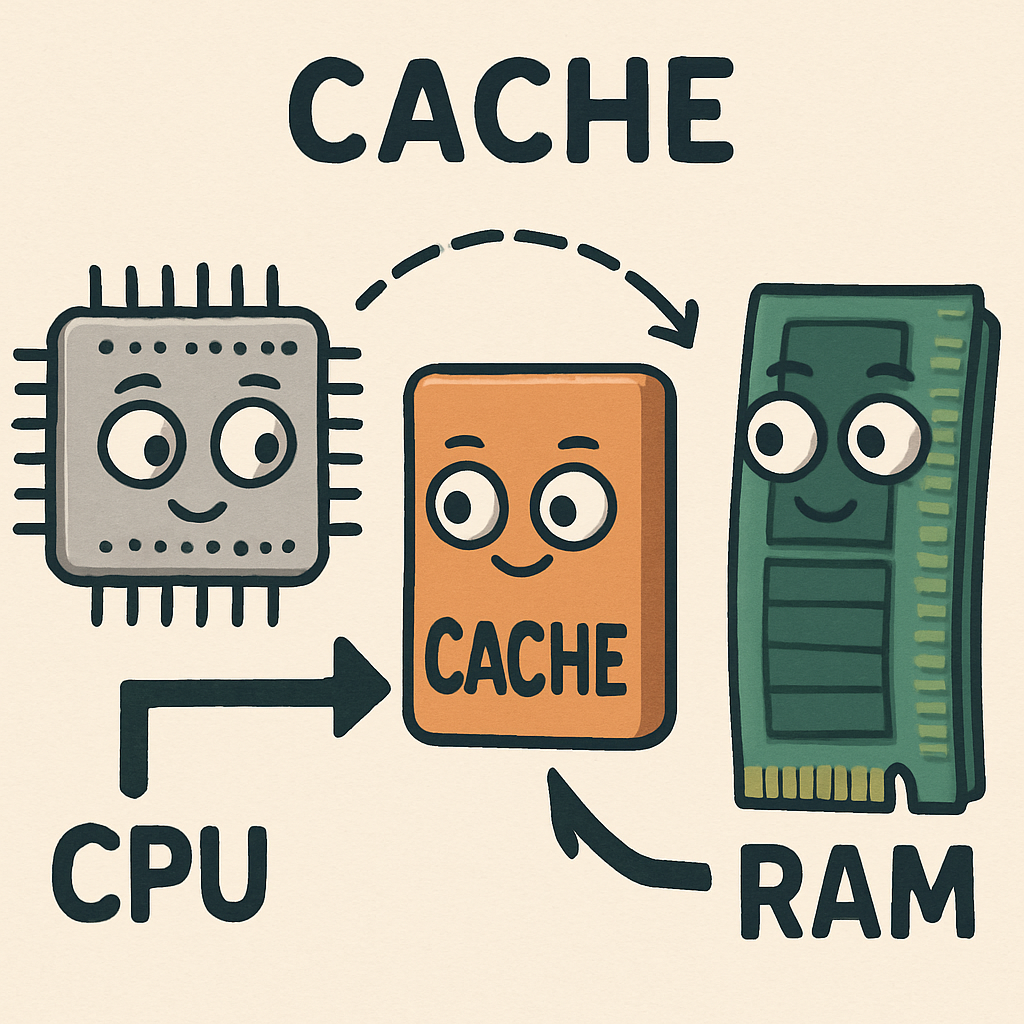

Cache:

This is a small, fast memory layer between the CPU and RAM. It stores frequently used data and instructions to reduce the time the CPU spends waiting for information from slower memory. There are different levels, such as L1 (the fastest and smallest, often built into the CPU), L2, and L3 (larger but slightly slower).

RAM (Random Access Memory):

This is the main memory of the computer, where programs and data are loaded when they're running. It's volatile, meaning it loses its contents when the power is turned off, but it's much larger than cache and registers, allowing you to run multiple applications at once.

Secondary Storage:

This is the slowest but largest and cheapest level of the hierarchy, and it includes devices like hard disk drives (HDDs) and solid-state drives (SSDs). It's non-volatile, so it keeps data even when powered off, making it ideal for long-term storage of files, programs, and operating systems.

The hierarchy exploits the principle of locality: programs tend to access the same data repeatedly or data that's nearby in memory, so keeping it in fast cache or registers improves performance and efficiency.
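The effect of locality can be illustrated with a toy cache simulation. This is a rough sketch under stated assumptions: the cache holds just 4 addresses, it evicts the least recently used one when full, and it stores single addresses rather than blocks of neighbouring data as real caches do. The function name `count_hits` is made up for this example.

```python
from collections import OrderedDict


def count_hits(addresses, cache_size=4):
    """Count how many accesses are served by a tiny least-recently-used cache."""
    cache = OrderedDict()
    hits = 0
    for addr in addresses:
        if addr in cache:
            hits += 1
            cache.move_to_end(addr)        # mark as most recently used
        else:
            cache[addr] = True             # miss: bring the address into the cache
            if len(cache) > cache_size:
                cache.popitem(last=False)  # evict the least recently used entry
    return hits


loop_accesses = [0, 1, 2, 3] * 5  # a loop reusing the same 4 addresses
scattered = list(range(20))       # 20 different addresses, never reused

print(count_hits(loop_accesses))  # 16
print(count_hits(scattered))      # 0
```

Both access patterns make 20 requests, but only the loop benefits from the cache, because it keeps returning to the same data.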

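Every location in memory has a unique address that the CPU uses to locate data. Addresses are binary numbers in hardware, and they are often written in hexadecimal because it is more compact and easier to read.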
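Python's built-in functions make it easy to see the same address in different number formats. The address value below is an arbitrary example, not taken from any real system.

```python
address = 2748  # an arbitrary example address, written in decimal

print(bin(address))             # 0b101010111100
print(hex(address))             # 0xabc
print(format(address, "016b"))  # 0000101010111100 (padded to 16 bits)
print(format(address, "04X"))   # 0ABC (padded to 4 hex digits)

# Converting back from a hexadecimal string to a number:
print(int("0xABC", 16))         # 2748
```

Each hexadecimal digit stands for exactly four binary digits, which is why hexadecimal is a convenient shorthand for long binary addresses.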

Now, let's see how the CPU interacts with memory in the fetch-execute cycle.

The fetch-execute cycle (also called the instruction cycle) is the basic operation of the CPU in the von Neumann architecture.

The von Neumann architecture is a computer design where both instructions and data are stored in the same memory, allowing programs to be treated as data and enabling flexible execution. The fetch-execute cycle is like the heartbeat of the computer, constantly repeating to process every single instruction in a program.

This cycle ensures that the CPU systematically retrieves, understands, and carries out instructions one by one.

In the following steps, you'll simulate this in Python using simple assembly-like instructions.

The CPU components, memory hierarchy, and fetch-execute cycle work in harmony in the von Neumann architecture.

The program counter (a CPU register) holds the address of the next instruction, which is fetched from memory – often starting from faster cache if available, or RAM if not. This demonstrates the memory hierarchy's role in providing quick access to data and instructions, balancing speed and capacity.

During the fetch step, the instruction moves into the instruction register. The control unit then decodes it, directing components like the ALU for operations such as addition or logic. Registers provide fast, temporary storage for data being processed, avoiding slower trips to RAM or secondary storage.

In the execute phase, the ALU performs calculations using data from registers or memory. Results might be stored back in registers or memory, and the program counter updates to point to the next instruction. This cycle repeats, with the hierarchy ensuring efficiency: frequently used data stays in fast cache or registers, while larger programs reside in RAM and persistent storage.
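The whole cycle can be sketched as a small Python loop. This is a simplified model, not a real instruction set: the instruction names (`LOAD`, `ADD`, `STORE`, `HALT`), the single accumulator register, and the function name `run` are assumptions for illustration. Instructions and data share one `memory` list, in the spirit of the von Neumann architecture.

```python
def run(memory: list) -> int:
    """Run a hypothetical program stored in memory; return the accumulator."""
    pc = 0   # program counter: address of the next instruction
    acc = 0  # accumulator: a register holding the current result
    while True:
        ir = memory[pc]   # FETCH: copy the instruction into the instruction register
        pc += 1           # point the program counter at the next instruction
        op, operand = ir  # DECODE: split into operation and operand address
        # EXECUTE: carry out the operation
        if op == "LOAD":
            acc = memory[operand]
        elif op == "ADD":
            acc = acc + memory[operand]
        elif op == "STORE":
            memory[operand] = acc
        elif op == "HALT":
            break
        else:
            raise ValueError(f"unknown instruction: {op}")
    return acc


memory = [
    ("LOAD", 4),     # address 0: load the value at address 4
    ("ADD", 5),      # address 1: add the value at address 5
    ("STORE", 6),    # address 2: store the result at address 6
    ("HALT", None),  # address 3: stop
    2,               # address 4: data
    3,               # address 5: data
    0,               # address 6: the result goes here
]

run(memory)
print(memory[6])  # 5
```

The program adds the values 2 and 3 and writes the result back to memory, passing through fetch, decode, and execute once for each of its four instructions.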